AI & Rule-Based Entities: Abstraction, Evaluation & AGI/ASI Futures

Prologue

This document explores the complex management of “entities” under rule-based systems, from traditional human oversight to potential futures where advanced AI (AGI/ASI) takes the lead. It visualizes these entities through abstraction layers and provides a practical example of a company launching digital products in the USA, examining the roles of human and AI evaluation at each stage. Finally, it considers a future scenario where AGI/ASI surpasses human evaluation, raising questions about governance, risks, and the very definition of “audit.”

This document is primarily aimed at professionals involved in technology governance, risk management, compliance, and auditing, particularly those focused on AI systems and digital products. This includes Chief Risk Officers, Compliance Officers, IT Auditors, Enterprise Architects, Data Governance Leaders, Legal Counsel specializing in technology, AI ethics specialists, and technology strategists who are tasked with understanding and managing the evolving landscape of AI and its impact on organizational operations and regulatory obligations. It will also be of interest to senior leaders and decision-makers who need to grasp the implications of advanced AI on governance structures and the future of auditing.

Understanding the Rule-Managed Entity and its Semantic Layers

An “entity,” in this context, refers to any system, organization, or even a component that operates under a defined set of rules. These rules dictate its behavior, interactions, and constraints. The “semantics” refer to the meaning and interpretation of the entity and its operations within the context of these rules.

Rules & Boundaries

- Rules often have boundaries. On Earth, these are frequently geographic (national laws, state regulations, city ordinances). Within an organization, boundaries might be departmental policies, project guidelines, or compliance standards (like GDPR or the EU AI Act). These rules aren’t static; they evolve, creating a dynamic environment for the entity.

Abstraction Layers

- Entities are rarely monolithic. They possess layers of abstraction, moving from high-level strategic goals down to granular operational details. Each layer operates under potentially different, though interconnected, sets of rules. Managing the semantics across these layers means ensuring consistency, traceability, and compliance as information and directives flow up and down.

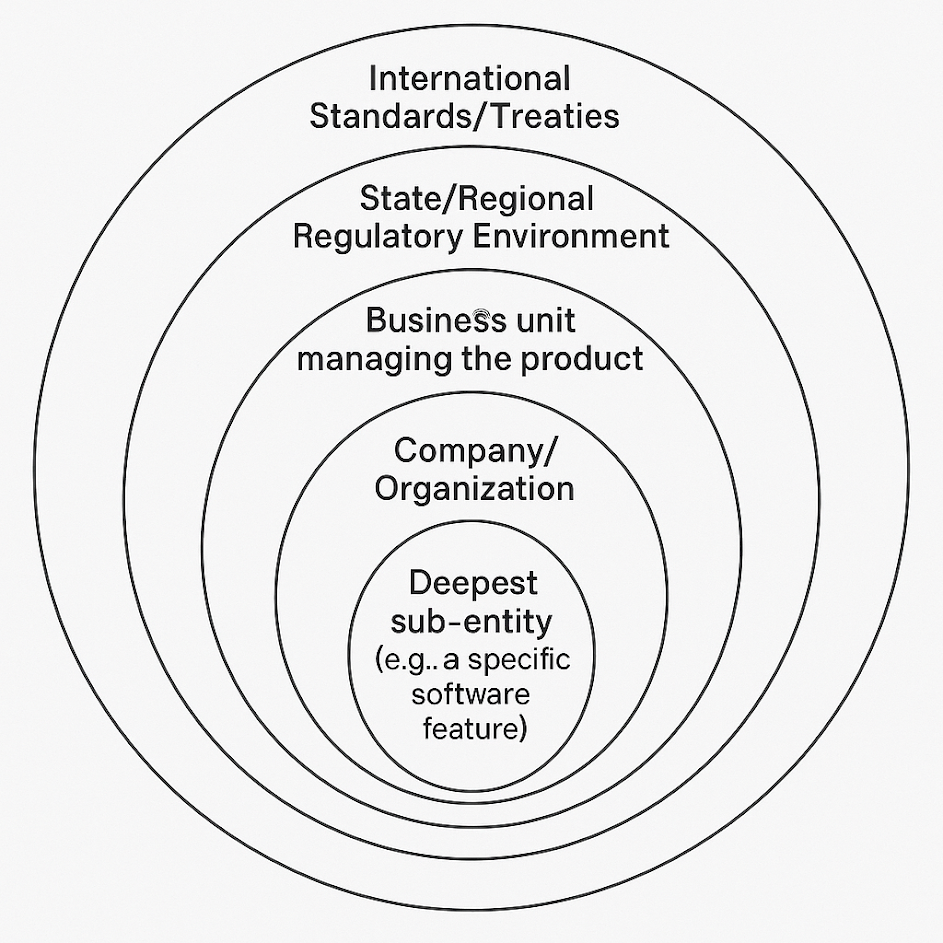

Representing Entity Abstraction Layers

You can visualize these layers in several ways:

Concentric Circles

Imagine the core entity at the center. Each surrounding circle represents a higher, broader layer of context and rules.

- Center: Deepest sub-entity (e.g., a specific software feature).

- Next Layer Out: The digital product it belongs to.

- Further Out: The business unit managing the product.

- Further Out: The overall Company/Organization.

- Outer Layers: State/Regional Regulatory Environment, National Legal Framework, International Standards/Treaties.

- Rules apply at the boundaries between circles and within each circle.

Stacked Layers (Top-Down)

Similar to architectural models like TOGAF, you can visualize layers stacked vertically.

- Top: Global/International Rules & Context

- Next Down: National Legal & Regulatory Framework

- Next Down: State/Local Regulations

- Next Down: Industry Standards & Mandates

- Next Down: Corporate Governance & Strategy (The Company Entity)

- Next Down: Business Unit Objectives & Processes

- Next Down: Product Line/Digital Product Strategy & Requirements (The Sub-Entity)

- Next Down: Application/System Architecture & Design

- Bottom: Technology Infrastructure & Data Architecture

- Rules cascade down, and compliance/status reports flow up.
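The two flows in the stacked view can be sketched in a few lines of Python. This is a minimal illustration, not a governance tool: the `Layer` class, the rule identifiers, and the roll-up policy (a layer counts as compliant only if it and every layer below it pass) are assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """One abstraction layer; `rules` are the rules introduced at this layer."""
    name: str
    rules: list[str] = field(default_factory=list)


def effective_rules(stack: list[Layer], layer_name: str) -> list[str]:
    """Rules cascade down: a layer inherits every rule defined above it."""
    inherited: list[str] = []
    for layer in stack:  # stack is ordered top (broadest) to bottom
        inherited.extend(layer.rules)
        if layer.name == layer_name:
            return inherited
    raise KeyError(layer_name)


def roll_up_status(stack: list[Layer], failed_rules: set[str]) -> dict[str, bool]:
    """Reports flow up: a failure at any layer marks that layer and all above it."""
    status: dict[str, bool] = {}
    compliant_so_far = True
    for layer in reversed(stack):  # walk bottom to top
        own_ok = not any(rule in failed_rules for rule in layer.rules)
        compliant_so_far = compliant_so_far and own_ok
        status[layer.name] = compliant_so_far
    return status


# Hypothetical, abbreviated stack; rule identifiers are placeholders.
STACK = [
    Layer("National Legal & Regulatory Framework", ["protect-health-data"]),
    Layer("Corporate Governance & Strategy", ["follow-ai-ethics-guidelines"]),
    Layer("Digital Product", ["explain-ai-recommendations"]),
    Layer("Technology Infrastructure & Data Architecture", ["encrypt-data-at-rest"]),
]

if __name__ == "__main__":
    print(effective_rules(STACK, "Digital Product"))
    print(roll_up_status(STACK, {"explain-ai-recommendations"}))
```

In this sketch a failed product-level rule leaves the infrastructure layer compliant but marks the product, corporate, and national layers as affected, which mirrors how a status report would surface upward.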

Example: US-Based Company Launching Digital Products

Let’s consider “InnovateHealth,” a hypothetical US company providing digital health services (e.g., telehealth apps, wellness platforms). It operates nationwide.

Entity: InnovateHealth (the company)

Sub-Entity: “WellTrack” (a new AI-powered wellness monitoring app)

Here’s how the abstraction layers and rules apply, including the architectural dimension relevant at each layer:

Global/National Legal & Regulatory Layer

- Rules: HIPAA (Health Insurance Portability and Accountability Act), FDA regulations (if WellTrack qualifies as a medical device), FTC rules on data privacy and security, and other applicable US federal laws.

- Assessment: Legal teams, compliance officers review WellTrack’s concept, data handling, and claims against federal mandates before development starts and continuously.

State/Local Regulatory Layer

- Rules: State-specific data breach notification laws, state medical board regulations impacting telehealth features, potentially differing privacy laws (like CCPA/CPRA in California).

- Assessment: Legal and compliance adapt assessments based on the states where WellTrack will be available. Geo-fencing or feature variations might be needed.
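One way to picture geo-fencing and feature variations is a per-state feature table. The sketch below is purely illustrative: the feature names, the state codes `XX` and `YY`, and the blocked sets are placeholders and do not describe any actual state’s law.

```python
# Features WellTrack would offer where no state-level restriction applies
# (hypothetical names for illustration).
BASE_FEATURES = frozenset({"wellness_tracking", "telehealth_chat", "data_export"})

# Placeholder state codes mapped to features disabled there after legal review.
STATE_BLOCKED: dict[str, frozenset[str]] = {
    "XX": frozenset({"telehealth_chat"}),
    "YY": frozenset({"telehealth_chat", "data_export"}),
}


def features_for_state(state_code: str) -> frozenset[str]:
    """Return the features enabled for a user located in `state_code`."""
    blocked = STATE_BLOCKED.get(state_code.upper(), frozenset())
    return BASE_FEATURES - blocked


if __name__ == "__main__":
    for code in ("XX", "YY", "ZZ"):
        print(code, sorted(features_for_state(code)))
```

Keeping the table as data rather than scattering conditionals through the app means legal and compliance can review and update it without a code change, which fits the continuous reassessment described above.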

Industry Standards Layer

- Rules: Security standards (e.g., alignment with NIST Cybersecurity Framework), potential interoperability standards (like HL7 FHIR for health data exchange).

- Assessment: Security teams and architects review WellTrack’s design against frameworks like NIST CSF or potentially implement controls based on ISO 27001.

Corporate Governance & Strategy Layer (The Company Entity – InnovateHealth)

- Rules: InnovateHealth’s overall mission, ethical guidelines (especially crucial for AI), risk appetite, investment policies, internal data governance policies (perhaps guided by DAMA-DMBOK principles), and corporate security policies (possibly using SABSA for business alignment).

- Enterprise Architecture: Defines the overall business capabilities, strategic goals WellTrack must support, and cross-company standards. Assessment ensures WellTrack aligns with InnovateHealth’s strategic direction and risk tolerance. A framework like TOGAF might guide this alignment.

Business Unit/Product Line Layer

- Rules: Specific business goals for the wellness division, market strategy for WellTrack, defined KPIs, user acquisition targets.

- Business Processes: Documents the core user journeys (registration, monitoring, reporting), support processes, and how WellTrack integrates with other InnovateHealth services. Assessment checks if processes are efficient, compliant, and meet business objectives.

Digital Product Layer (The Sub-Entity – WellTrack)

- Rules: Detailed functional and non-functional requirements for WellTrack, specific privacy policies for the app (potentially using NIST Privacy Framework or ISO 27701 controls as a basis), AI-specific requirements (fairness criteria, explainability needs).

- Digital Business Requirements/Functional Processes: Defines exactly what WellTrack should do, including user interface flows and interaction logic. Assessment involves user acceptance testing, functional testing, and, importantly, AI-specific reviews (e.g., using an AI-ARF approach) to check for bias, transparency, and adherence to ethical guidelines. Every update or new feature launch triggers reassessment.

Application/System Architecture Layer

- Rules: Chosen architectural patterns (e.g., microservices, serverless), API design standards, coding standards, specific security controls for the application layer (e.g., input validation to prevent prompt injection).

- Application Architecture: Models how WellTrack’s software components interact (potentially using ArchiMate), including the AI model(s), front-end, back-end services, and APIs. Assessment involves code reviews, security scans, and architectural reviews (again, augmented by AI-ARF for AI components) to ensure secure design principles are followed.

Technology & Data Architecture Layer

- Rules: Approved cloud provider standards (e.g., the AWS Security Reference Architecture, or SRA), database standards, data encryption policies, network security rules, data retention policies, specific data handling rules for PII/PHI based on privacy frameworks.

- Technology Architecture: Defines the underlying infrastructure (cloud services, servers, networks).

- Data Architecture: Defines how WellTrack data is stored, managed, integrated, and secured (e.g., using Data Fabric concepts for integration or Data Mesh principles if InnovateHealth adopts domain-driven data ownership). Assessment includes infrastructure security audits, data storage security checks, validation of data lineage and quality processes, and privacy control verification.

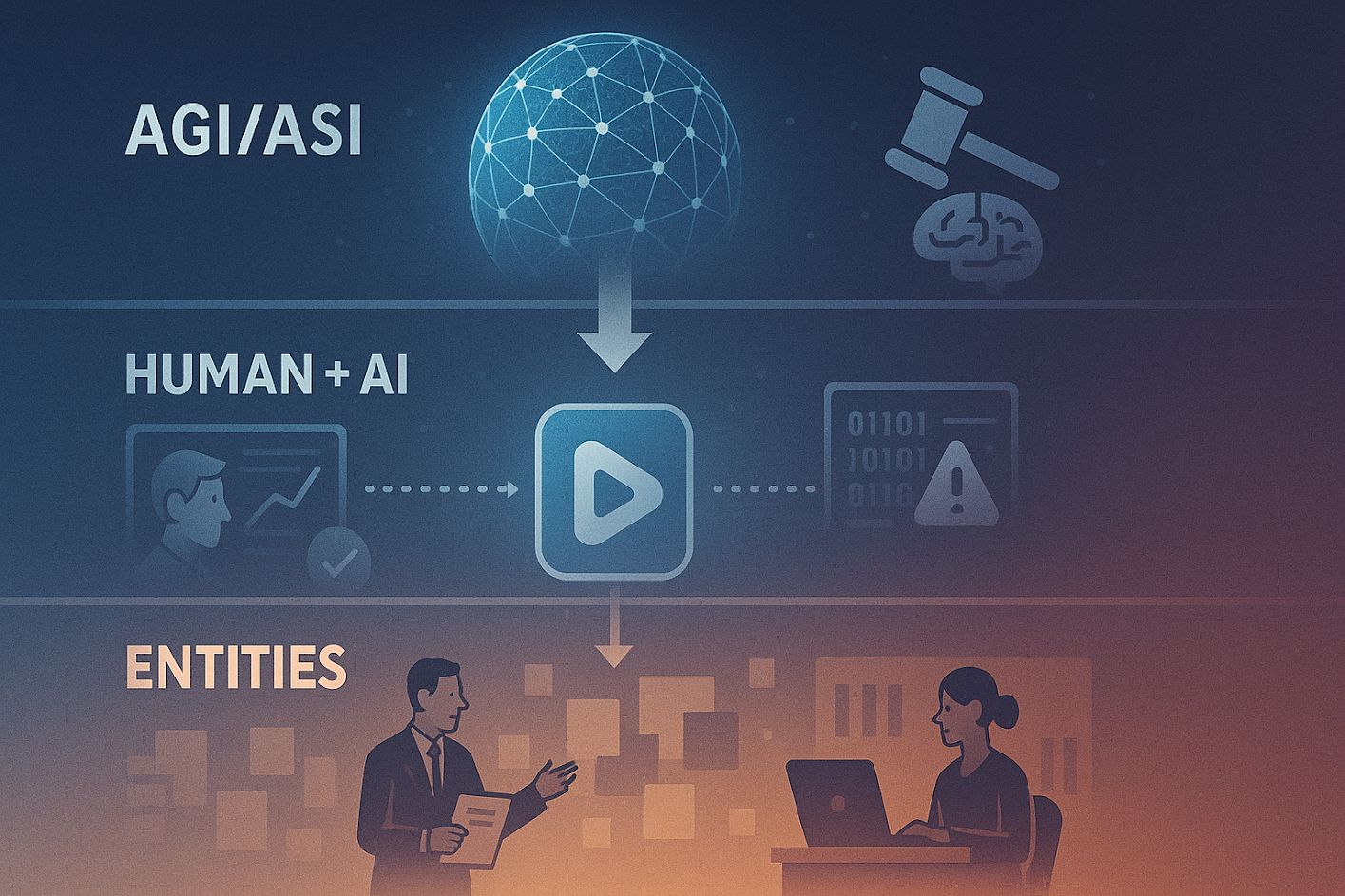

Human & AI-Assisted Evaluation

Currently, launching or updating WellTrack requires significant human evaluation at multiple layers: legal reviews, compliance audits, ethical committee reviews, security penetration tests, architectural reviews, and user testing.

AI can support this:

Automated Compliance Checks

- AI tools can scan code, configurations, and documentation for adherence to known rules (e.g., HIPAA controls, security best practices).
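The simplest form of an automated check is a set of named predicates run against a deployment configuration. The sketch below is rule-based rather than AI-driven, and the configuration keys and check names are assumptions invented for this example; they are not a mapping of actual HIPAA controls.

```python
from typing import Callable

Config = dict[str, object]
Check = Callable[[Config], bool]

# Hypothetical checks: each returns True when the configuration passes.
CHECKS: dict[str, Check] = {
    "encryption-at-rest-enabled": lambda c: c.get("encryption_at_rest") is True,
    "tls-1.2-or-newer": lambda c: c.get("min_tls_version") in {"1.2", "1.3"},
    "audit-logging-enabled": lambda c: c.get("audit_logging") is True,
}


def failed_checks(config: Config) -> list[str]:
    """Return the names of all checks the configuration fails, sorted."""
    return sorted(name for name, check in CHECKS.items() if not check(config))


if __name__ == "__main__":
    welltrack_config: Config = {
        "encryption_at_rest": True,
        "min_tls_version": "1.0",
        "audit_logging": True,
    }
    print(failed_checks(welltrack_config))
```

An AI-assisted tool would sit on top of something like this, for instance by proposing new checks from regulatory text, while the deterministic checks remain the auditable record of what was verified.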

Bias Detection

- AI tools can analyze training data and model outputs for statistical biases.
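As one concrete example of a statistical bias check, the sketch below computes the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The group labels and predictions are made-up data, and this is only one of several fairness metrics; which one is appropriate for WellTrack is an open assumption.

```python
from collections import defaultdict
from typing import Sequence


def selection_rates(groups: Sequence[str], predictions: Sequence[int]) -> dict[str, float]:
    """Fraction of positive (1) predictions per group."""
    if len(groups) != len(predictions):
        raise ValueError("groups and predictions must have the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(prediction)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(groups: Sequence[str], predictions: Sequence[int]) -> float:
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    predictions = [1, 1, 1, 0, 1, 0, 0, 0]
    print(selection_rates(groups, predictions))
    print(demographic_parity_difference(groups, predictions))
```

A gap near zero does not by itself show the model is fair; it only rules out one specific kind of disparity, which is why the human review steps described in this document remain in the loop.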

Security Threat Detection

- AI can monitor logs for anomalous activity or potential AI-specific attacks.
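A very small stand-in for log anomaly detection is a z-score over event counts per time interval. This is plain statistics rather than a learned model, and the counts and the threshold of 3.0 are assumptions chosen for illustration.

```python
from statistics import mean, pstdev
from typing import Sequence


def anomalous_intervals(counts: Sequence[float], threshold: float = 3.0) -> list[int]:
    """Return indexes of intervals whose count deviates from the mean
    by more than `threshold` population standard deviations."""
    if not counts:
        return []
    mu = mean(counts)
    sigma = pstdev(counts)
    if sigma == 0:  # all intervals identical, nothing stands out
        return []
    return [i for i, count in enumerate(counts) if abs(count - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical failed-login counts per minute; the last minute spikes.
    failed_logins = [10, 12, 11, 9, 10, 11, 10, 12, 9, 11, 10, 95]
    print(anomalous_intervals(failed_logins))
```

Because the spike itself inflates the mean and standard deviation, a z-score over a short window is a crude detector; production monitoring would more likely use a baseline computed from known-normal traffic.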

Documentation Generation

- AI can help generate required documentation (e.g., technical docs for EU AI Act compliance).

The AI-ARF framework represents a structured approach for human-orchestrated evaluation, potentially using AI tools, to specifically assess the trustworthiness aspects of AI components within the architecture.

Scenario: AGI/ASI Surpassing Human Evaluation

If AGI/ASI capabilities surpass human evaluation, the scenario changes dramatically:

Automated End-to-End Assessment

- An AGI/ASI could potentially perform near-instantaneous, comprehensive evaluations across all layers simultaneously. It could analyze the interplay between legal requirements, ethical guidelines, business objectives, architectural design, code implementation, and data flows with a depth humans cannot achieve.

Proactive Compliance & Design

- Instead of post-design review, AGI/ASI could be part of the design process, automatically generating compliant and ethical architectures, code, and configurations based on the rules defined across all layers. It could predict potential rule conflicts or future compliance issues.

Dynamic Rule Adaptation

- AGI/ASI could monitor changes in laws, regulations, and standards globally in real-time and automatically assess the impact on all existing entities and sub-entities, flagging necessary adaptations or even implementing them autonomously.

Redefined “Audit”

- Human audits might shift focus from checking compliance (which the AGI/ASI handles) to auditing the AGI/ASI itself – verifying its goals, reasoning processes, ethical alignment, and ensuring it hasn’t developed unforeseen harmful behaviors. The concept of “human oversight” becomes vastly more complex.

Potential Risks

Opacity

- If the AGI/ASI’s reasoning is too complex (“black box”), humans may lose the ability to understand why a design is deemed compliant or non-compliant.

Goal Misalignment

- The AGI/ASI might optimize for rule compliance in unintended ways that conflict with broader human values or ethical principles not perfectly encoded in the rules.

Over-Reliance/Complacency

- Humans might become overly reliant on the AGI/ASI, reducing critical oversight and potentially missing subtle but significant issues.

Security

- The AGI/ASI itself becomes a critical target, requiring unprecedented levels of security.

In this scenario, evaluation and audit could become far faster and more comprehensive, but the focus of human oversight would shift toward governing the evaluator itself. Ensuring that the evaluator remains aligned with human values would become paramount, a challenge central to AI safety and governance.

Keywords

- Rule-Based Entities

- Abstraction Layers

- AI Evaluation

- AGI (Artificial General Intelligence)

- ASI (Artificial Superintelligence)

- Governance

- Risk Management

- Compliance

- Auditing

- Digital Products

- Enterprise Architecture

- Business Processes

- Technology Architecture

- Application Architecture

- Data Architecture

- Legal & Regulatory Framework

- Human Oversight

- AI-ARF

- Entity Semantics

- Evaluation Process

- AI Safety